In the rapidly evolving world of data and analytics, the ability to interact seamlessly with complex systems is paramount. As a leading professional services and solutions provider in the field, we are at the forefront of harnessing the power of Large Language Models (LLMs) to simplify these interactions. Our communities, especially our NLP community, are excited to share their expertise through a series of tutorial videos that showcase the potential of LLMs and Generative AI.

Our first tutorial in this series focuses on integrating LLMs with the Databricks API, the programmatic interface to the Databricks data analytics platform. This article walks through the tutorial and shows how we can use LLMs to build a language interface, modify job conditions, manage permissions, and even promote a model to production. Whether you’re a seasoned data scientist or a curious beginner, this guide offers insights into the capabilities of LLMs and their role in shaping the future of data and analytics.

Introduction to Language Interface with Databricks API

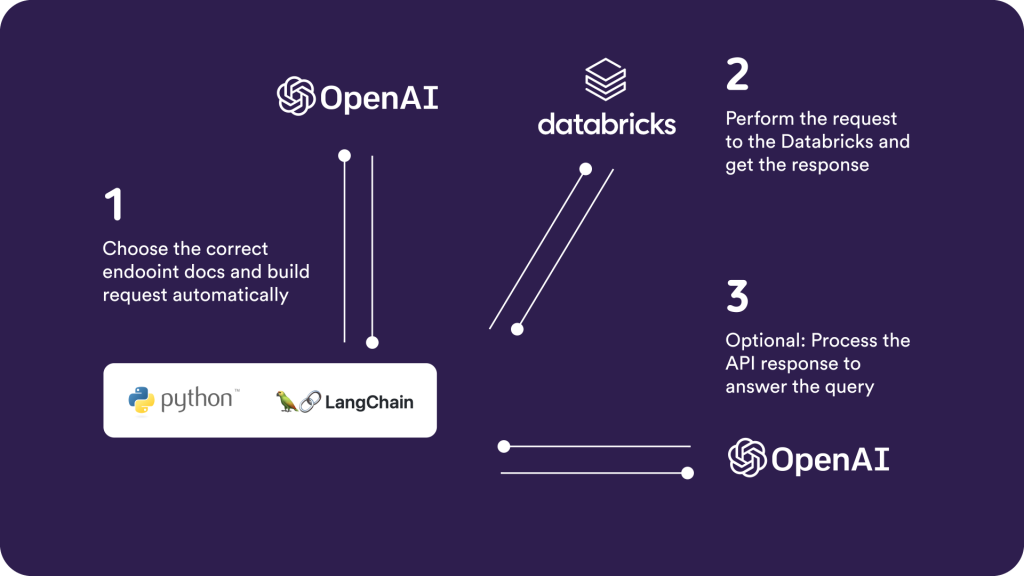

In this first episode, we explore LLMs and how they can be used to build a language interface to the Databricks API. The tutorial relies on a Python-based Command Line Interface (CLI) and the widely used LangChain library.

The process starts with a query written in plain English (yes, in natural language!). The LLM interprets the query and selects the documentation of the matching API endpoint. It then uses that documentation to construct the request URL. The request is executed, and the LLM processes the response from the Databricks API to answer the original query.
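The four steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the code from the tutorial repository. The LLM is modelled as any function that takes a prompt string and returns text. The HTTP call is injected so the example runs offline. `ENDPOINT_DOCS`, `answer_query`, `fake_llm`, `fake_http_get`, and the workspace host are hypothetical names. Libraries such as LangChain package this pattern into reusable chains; the sketch only makes the control flow visible.

```python
from typing import Callable, Dict, List

# Hypothetical, abbreviated endpoint documentation the LLM can choose from.
ENDPOINT_DOCS: Dict[str, str] = {
    "jobs-list": "GET /api/2.1/jobs/list - lists the jobs in the workspace.",
    "registered-model-get": (
        "GET /api/2.0/mlflow/registered-models/get?name=<model name> - "
        "returns details of a registered model."
    ),
}

LLM = Callable[[str], str]      # prompt in, text out
HttpGet = Callable[[str], str]  # URL in, response body out


def answer_query(query: str, llm: LLM, http_get: HttpGet, host: str) -> str:
    # 1. Ask the LLM which endpoint documentation matches the query.
    menu = "\n".join(f"{key}: {doc}" for key, doc in ENDPOINT_DOCS.items())
    key = llm(f"Query: {query}\nEndpoints:\n{menu}\nReply with one endpoint key.").strip()
    if key not in ENDPOINT_DOCS:
        raise ValueError(f"LLM chose an unknown endpoint: {key!r}")

    # 2. Ask the LLM to build the request path from that documentation.
    path = llm(
        f"Query: {query}\nDocs: {ENDPOINT_DOCS[key]}\nReply with the request path only."
    ).strip()
    url = host.rstrip("/") + path

    # 3. Execute the request (injected, so no network access is needed here).
    body = http_get(url)

    # 4. Ask the LLM to turn the raw API response into an answer.
    return llm(f"Query: {query}\nAPI response: {body}\nAnswer the query.")


# --- Offline demo with stand-ins for the LLM and the HTTP client ---
def fake_llm(prompt: str) -> str:
    if prompt.endswith("Reply with one endpoint key."):
        return "registered-model-get"
    if prompt.endswith("Reply with the request path only."):
        return "/api/2.0/mlflow/registered-models/get?name=sentiment_classifier"
    return "stub answer"


requested_urls: List[str] = []


def fake_http_get(url: str) -> str:
    requested_urls.append(url)  # record the URL instead of calling Databricks
    return '{"registered_model": {"name": "sentiment_classifier"}}'


answer = answer_query(
    "What do we know about the model sentiment_classifier?",
    fake_llm,
    fake_http_get,
    "https://my-workspace.cloud.databricks.com",  # hypothetical workspace host
)
```

The sketch checks the endpoint key before it builds any request, so a misbehaving LLM cannot steer the interface toward an endpoint outside the documented set. A real deployment would also need authentication and error handling.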

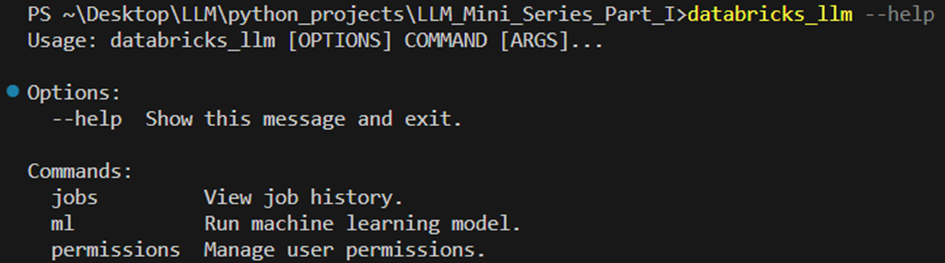

Usage is analogous to any other CLI. Here is an overview of the available commands.

Each command offers subcommands. For example, “get-model-info” retrieves information about a particular model in the model registry (here: ‘sentiment_classifier’).
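A command with subcommands like this can be modelled with Python’s standard `argparse` module. The sketch below is an assumption about the general shape of such a CLI, not the repository’s actual implementation. The program name `dbx-llm` and the helper functions are hypothetical. It maps “get-model-info” to the MLflow model registry endpoint `GET /api/2.0/mlflow/registered-models/get`. Verify that path against the current Databricks documentation.

```python
import argparse
from typing import Tuple
from urllib.parse import urlencode


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="dbx-llm")  # hypothetical program name
    sub = parser.add_subparsers(dest="command", required=True)

    # One subparser per subcommand; only "get-model-info" is sketched here.
    info = sub.add_parser("get-model-info", help="Show details of a registered model")
    info.add_argument("model_name", help="Name in the model registry, e.g. sentiment_classifier")
    return parser


def request_for(args: argparse.Namespace) -> Tuple[str, str]:
    """Map a parsed subcommand to an (HTTP method, request path) pair."""
    if args.command == "get-model-info":
        query = urlencode({"name": args.model_name})
        return ("GET", f"/api/2.0/mlflow/registered-models/get?{query}")
    raise ValueError(f"unsupported command: {args.command}")


# Equivalent to running: dbx-llm get-model-info sentiment_classifier
args = build_parser().parse_args(["get-model-info", "sentiment_classifier"])
method, path = request_for(args)
```

Keeping the parsing step separate from the request-building step makes each subcommand easy to test without touching a live workspace.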

Access to the GitHub Repository: https://github.com/PositiveThinkingComp/LLM_Mini_Series_Part_I

In this tutorial, we talk to the Databricks API in order to:

- List and modify jobs

- Change permissions and e-learning functionalities

- Put a model in production
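Under the hood, each of these tasks ends up as an ordinary REST request. The article does not state which endpoints the tutorial calls. The sketch below assumes the publicly documented Databricks Jobs API 2.1, the Permissions API, and the MLflow model registry’s stage-transition endpoint (workspace model registry). It only builds the requests and does not send them. An HTTP client would add the workspace host and an `Authorization: Bearer <token>` header. The `ApiRequest` class, the helper names, job ID `123`, and the user name are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class ApiRequest:
    method: str
    path: str
    body: Optional[Dict[str, Any]] = None


def list_jobs() -> ApiRequest:
    return ApiRequest("GET", "/api/2.1/jobs/list")


def update_job(job_id: int, new_settings: Dict[str, Any]) -> ApiRequest:
    # Partial update: only the top-level fields given in new_settings change.
    return ApiRequest("POST", "/api/2.1/jobs/update",
                      {"job_id": job_id, "new_settings": new_settings})


def grant_job_permission(job_id: int, user_name: str, level: str) -> ApiRequest:
    # PATCH modifies only the listed entries; PUT would replace the whole list.
    acl = [{"user_name": user_name, "permission_level": level}]
    return ApiRequest("PATCH", f"/api/2.0/permissions/jobs/{job_id}",
                      {"access_control_list": acl})


def promote_to_production(model_name: str, version: int) -> ApiRequest:
    # archive_existing_versions moves versions already in Production to Archived.
    return ApiRequest("POST", "/api/2.0/mlflow/model-versions/transition-stage",
                      {"name": model_name, "version": str(version),
                       "stage": "Production", "archive_existing_versions": True})


reqs = [
    list_jobs(),
    update_job(123, {"max_concurrent_runs": 2}),
    grant_job_permission(123, "someone@example.com", "CAN_MANAGE_RUN"),
    promote_to_production("sentiment_classifier", 3),
]
```

In the language interface, the LLM’s job is to fill in these method, path, and body fields from a natural-language request. Reviewing the generated request before sending it is a sensible safeguard for write operations such as permission changes and stage transitions.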

Exploring Other Potential Use Cases of LLMs in API Integrations

The capabilities of LLMs in API integrations extend far beyond what we’ve demonstrated in this tutorial. The ability to interact with APIs using natural language opens up a world of possibilities for various industries and applications. Here are a few potential use cases:

Customer Support Automation

LLMs can be used to automate customer support systems. By integrating LLMs with an API, businesses can create a system where customers ask questions in natural language. The LLM interprets the query, interacts with the necessary API to retrieve or manipulate data, and provides a response in a user-friendly manner. This can significantly improve the efficiency and effectiveness of customer support, reducing wait times and improving customer satisfaction.

Data Analysis and Reporting

In the data analytics domain, LLMs can simplify the process of generating reports and insights. Users can ask the system questions in natural language, such as “What were the sales figures for the last quarter?” or “How many new users signed up last month?”. The LLM can understand these queries, interact with the relevant API to fetch the data, and present it in a comprehensible format. This can make data analysis more accessible to non-technical users and facilitate data-driven decision-making.

Healthcare Applications

In healthcare, LLMs can be used to create more intuitive interfaces for Electronic Health Record (EHR) systems. Healthcare professionals could ask the system for patient information using natural language, and the LLM could retrieve the relevant data from the EHR. This could make it easier for healthcare professionals to access and update patient information, improving the efficiency of healthcare delivery.

Educational Tools

LLMs can also be used to create more interactive and engaging educational tools. For example, an LLM could be integrated with an API that provides information about historical events. Students could ask the system questions in natural language, and the LLM could retrieve the relevant information, turning passive reading into an active dialogue.

The integration of LLMs with APIs has the potential to fundamentally change the way we interact with systems and data. By enabling more natural and intuitive interactions, LLMs can make complex systems more accessible, improve efficiency, and open up new opportunities for innovation.

In conclusion, the integration of LLMs with APIs is more than a technological advancement; it’s a step towards making complex systems more human-friendly. As we continue to explore and harness the power of LLMs, we look forward to a future where technology is not just about machines understanding humans, but also about humans understanding machines. Stay tuned for more in our LLM mini-series as we continue to explore the exciting possibilities of this technology.


Subscribe to the YouTube channel: https://www.youtube.com/@Positive_Thinking_Company