The creation of a container

Orchestration starts with the creation of a container. Because containers are often integrated directly and regularly rely on images from various sources, strict build hygiene is essential for a reliable deployment.

Each organization must rely on strong, rigorous guidelines for container creation, and adopt the best practices and rules needed to maintain the required quality.

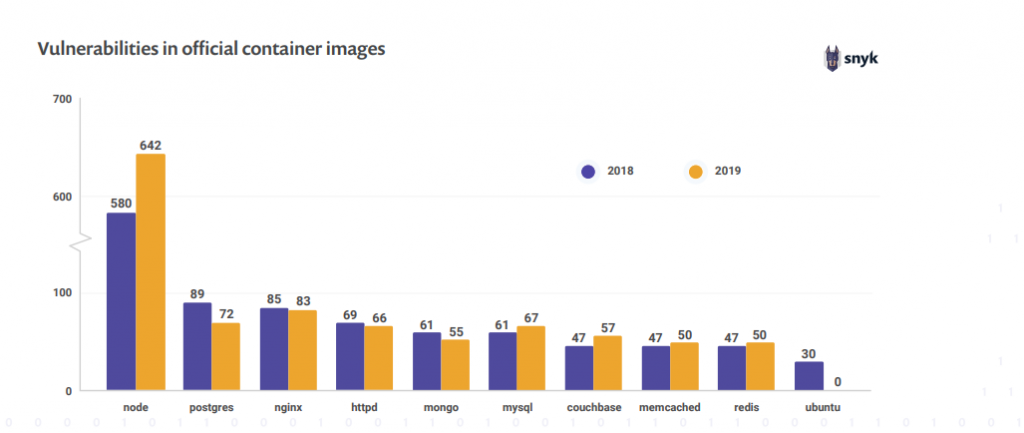

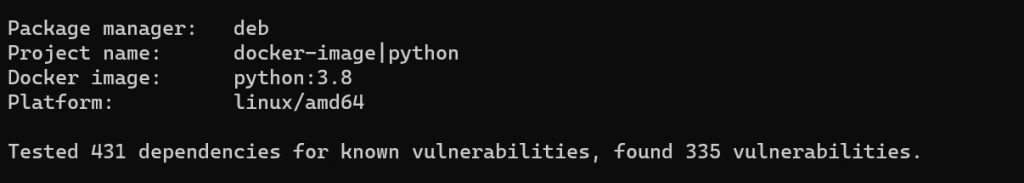

Snyk's annual report (https://snyk.io/open-source-security/) is often cited as a reference. It shows that the number of vulnerabilities remains high, even in official images, and that some of them are of critical severity. The trend in recent years has been rather positive. Nevertheless, it is essential to take all necessary precautions.

Organizational requirements

Many very specific points are debated, and these debates are not only technical but above all organizational.

One example is the disagreement over updating packages during the build:

RUN yum -y update && yum clean all

The choice depends on security requirements, the need for a reproducible build, the way packages are managed, the tagging strategy…

To simplify, the debate comes down to two positions:

- The “pro-upgrade” camp, for whom security is critical and must be taken into account at every build, without intervention from the developers.

- The “pro-reproducibility” camp, which argues that updating at build time makes images non-reproducible: two builds that are supposed to be identical can differ. For them, it is better to build a new image with fixed versions and retest it properly.
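The “pro-upgrade” position corresponds to the `yum -y update` line shown earlier. As a minimal sketch of the “pro-reproducibility” position, the fragment below pins both the base image and a package version. The `almalinux:8` default, the `httpd` package, and the `BASE_IMAGE` and `HTTPD_VERSION` build arguments are hypothetical placeholders chosen for illustration, not something taken from a real project.

```dockerfile
# Hypothetical default; a CI pipeline would ideally pass an image@sha256 digest
# reference here so that two builds start from exactly the same base.
ARG BASE_IMAGE=almalinux:8
FROM ${BASE_IMAGE}

# Hypothetical package and version, passed with --build-arg HTTPD_VERSION=...
# The build fails if no version is given, which is the intended behavior.
ARG HTTPD_VERSION

# No "yum -y update": only the explicitly versioned package is installed,
# and the yum cache is removed in the same layer.
RUN yum -y install "httpd-${HTTPD_VERSION}" && yum clean all
```

With this approach, a security fix arrives through a deliberate change of the pinned values followed by a retest, rather than implicitly at the next build.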

Regardless of these debates, a number of practices must be put in place:

- Images must be scanned: this helps to understand the risks.

- Images must be light: with widespread use of the Cloud, this limits the bandwidth required (this is especially true for CI/CD).

- Images must have health checks: this is the minimum to ensure that the image is working properly at all times.

- Do not use different images depending on the environment: otherwise we fall back into the classic “it works for me”, which can be very annoying 🙂 .

- Correctly manage secrets and environments.
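Several of these practices can be expressed directly in a Dockerfile. The sketch below is only an illustration: the application files (`requirements.txt`, `app.py`), the `pip_conf` secret id, the `APP_ENV` variable, and the `/health` endpoint on port 8080 are all assumptions made for the example.

```dockerfile
# syntax=docker/dockerfile:1
FROM python:3.8-slim

WORKDIR /app
COPY requirements.txt .

# Build-time secret (requires BuildKit): the file is mounted only for this RUN
# and is never written into an image layer. "pip_conf" is a hypothetical id,
# supplied with: docker build --secret id=pip_conf,src=<path to a pip.conf>
RUN --mount=type=secret,id=pip_conf,target=/etc/pip.conf \
    pip install --no-cache-dir -r requirements.txt

COPY . .

# One image for every environment: only runtime variables change,
# for example with "docker run -e APP_ENV=staging".
ENV APP_ENV=production

# The container is reported unhealthy when the hypothetical endpoint
# stops answering or returns an HTTP error.
HEALTHCHECK --interval=30s --timeout=3s --retries=3 \
  CMD python -c "import urllib.request; urllib.request.urlopen('http://localhost:8080/health', timeout=2)" || exit 1

CMD ["python", "app.py"]
```

The health check uses the Python interpreter already present in the image, so no extra tool such as curl has to be installed just for probing.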

Use Case

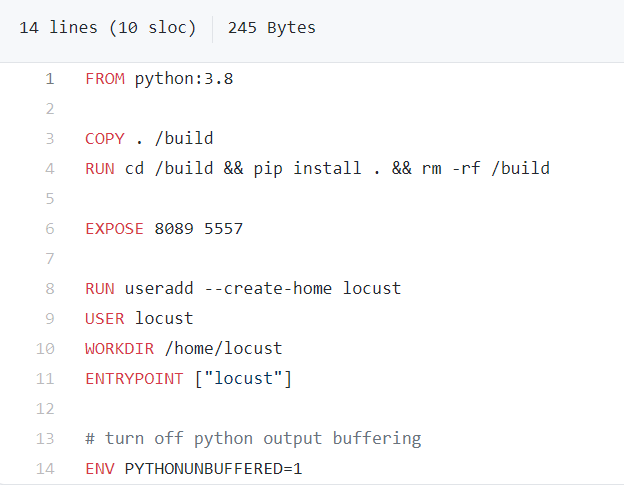

Sometimes an image that seems simple can hide deeper problems.

This image is extremely simple, seems rather well thought out, and raises no particular suspicion (no extra layer, no health check, no set -e: it remains classic). And yet:

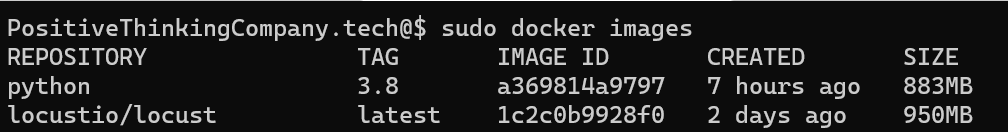

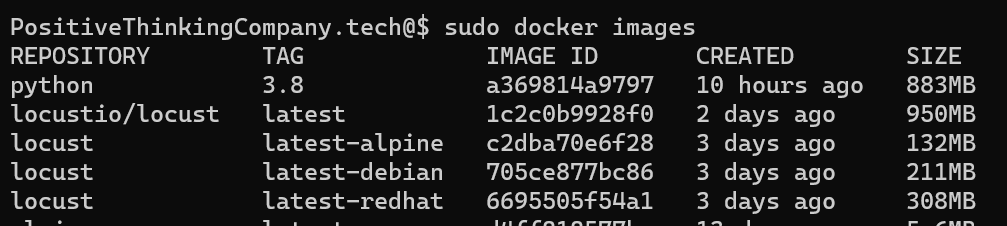

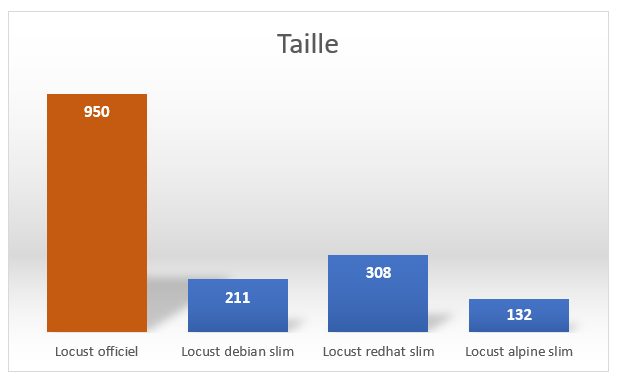

This image weighs 950MB, which is ~$0.08 per pull in AWS. And this is an image that is potentially meant to scale: at that rate, pulling it onto 100 nodes costs about $8 in transfer alone.

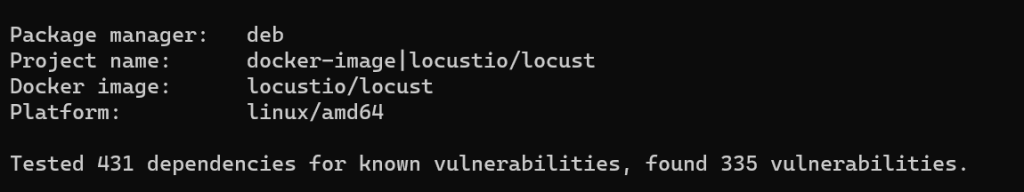

Regarding potential vulnerabilities:

We see that they come mainly from the base image:

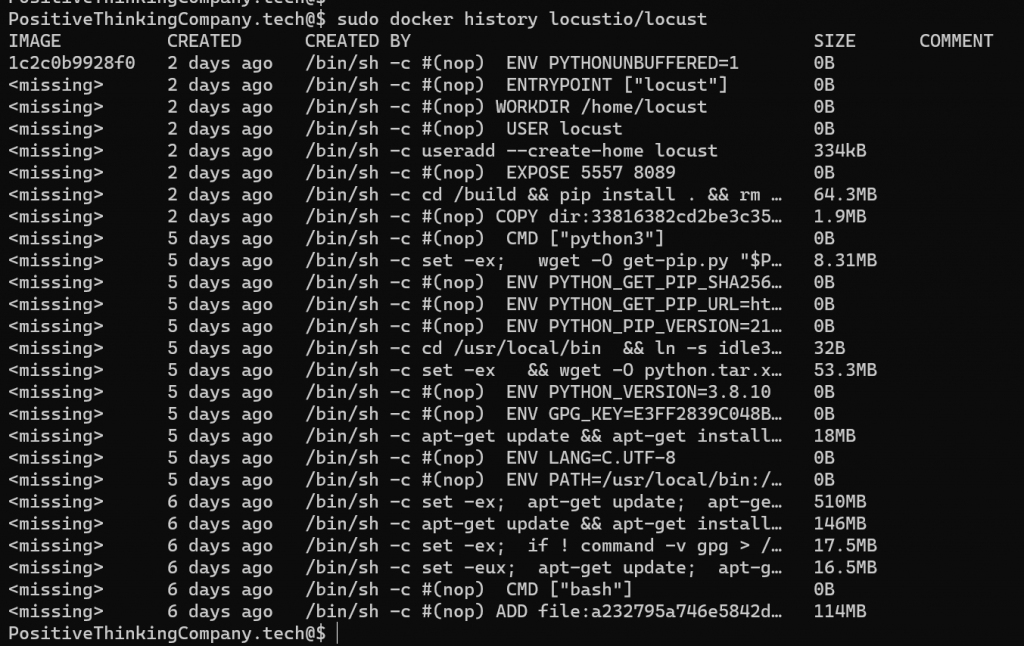

If we look in more detail at the construction of the image, and more specifically at the different layers, here is what emerges:

Let’s quickly analyze this result.

- The base image (debian:buster) weighs 114MB

- Adding the buildpack-deps:buster image installs many development tools:

  - Git

  - Subversion

  - Curl

  - Mercurial (146MB just for this dependency)

  - A set of compilation and development tools (510MB).

The official image is designed for local testing during the development of Locust (https://locust.io/), but for the end user it has several flaws:

- It is relatively heavy. In a deployment that must scale, the image will be downloaded on each node.

- It rebuilds/compiles the code each time.

- It is not up to date in terms of security.

The main flaw comes from the debian/python 3.8 images, which ship many tools for downloading and compiling the Python packages the application needs. The base image (debian) itself is “relatively” light at 114MB.

As good practice, the following methods are mainly used to reduce image size:

- The use of .dockerignore (Not used here).

- The use of multi-stage builds (Not used here).

- The aggregation of RUN commands.

- Use of a simple base image.

- The removal of unnecessary packages and of the dependencies installed along with them.
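As a rough illustration of the multi-stage and simple-base-image methods, here is a minimal sketch for a Locust image. It is not the Locust project's official Dockerfile: the `python:3.8-slim` base, the virtual environment path `/opt/venv`, and the `locust` user name are assumptions made for the example.

```dockerfile
# Stage 1: build environment, with the compiler needed by packages that
# have no prebuilt wheel. Nothing from this stage ships unless copied.
FROM python:3.8-slim AS builder
RUN apt-get update \
 && apt-get install -y --no-install-recommends build-essential \
 && rm -rf /var/lib/apt/lists/*
RUN python -m venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"
RUN pip install --no-cache-dir locust

# Stage 2: runtime image. Same base as the builder so the interpreter path
# referenced by the virtual environment is identical.
FROM python:3.8-slim
COPY --from=builder /opt/venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"

# Run as an unprivileged user (hypothetical name).
RUN useradd --create-home locust
USER locust
WORKDIR /home/locust

# 8089 is the default port of the Locust web interface.
EXPOSE 8089
ENTRYPOINT ["locust"]
```

The compiler and development headers stay in the builder stage, so they add neither size nor attack surface to the image that is actually deployed.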

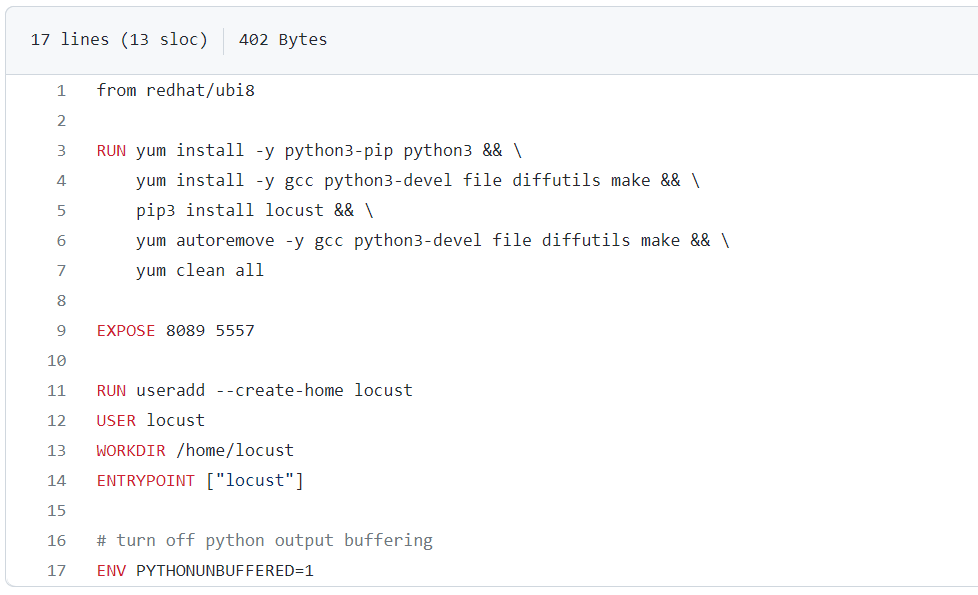

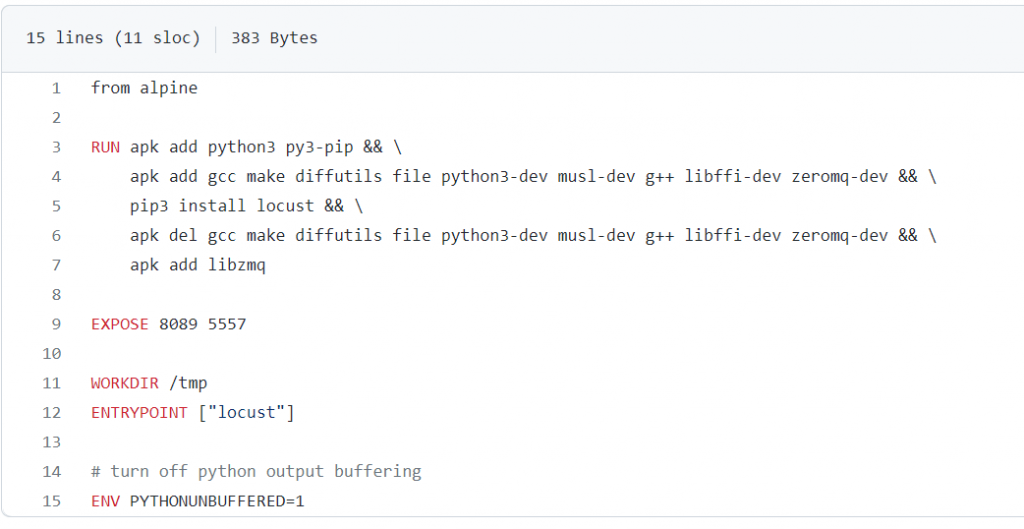

By using simple base images, it is possible to optimize the result quite significantly, either by starting from the same debian base, or by taking an alpine or redhat base.

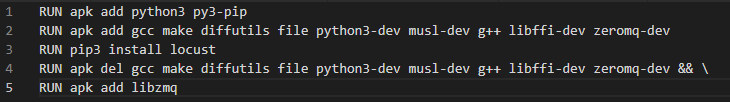

You will notice command lines that seem too long, a pattern found in many images. For development purposes, it can be convenient to separate the steps into different layers, as in the following example:

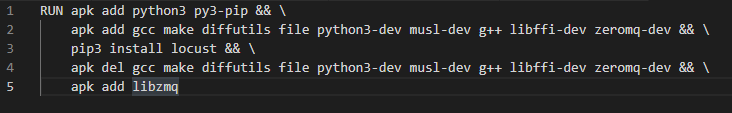

When finalizing the image, however, it remains important to group the layers using this format:

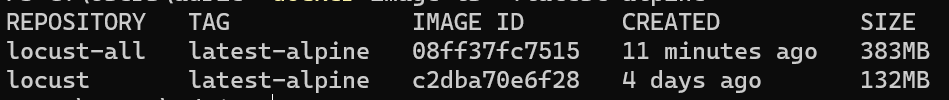

The difference between these two forms is unambiguous. The first creates an image more than twice as large as the second (383MB vs 132MB), because of the intermediate layers. Again, in a cloud context where many containers can be instantiated, this saves time and traffic, which translates into a cost reduction.
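The same idea can be sketched as follows. This is an illustration under assumptions (a `python:3.8-slim` base and a `pip install locust` step), not a transcription of the Dockerfiles shown in the screenshots above, and it makes no claim about reproducing the sizes quoted.

```dockerfile
FROM python:3.8-slim

# Development form, kept here as comments: one layer per step. The cleanup in
# the last steps cannot shrink the layers already written by the earlier ones.
#   RUN apt-get update
#   RUN apt-get install -y --no-install-recommends build-essential
#   RUN pip install locust
#   RUN apt-get purge -y --auto-remove build-essential
#   RUN rm -rf /var/lib/apt/lists/*

# Finalized form: install, use, and remove the build tools inside one layer,
# so the temporary files never reach the final image.
RUN apt-get update \
 && apt-get install -y --no-install-recommends build-essential \
 && pip install --no-cache-dir locust \
 && apt-get purge -y --auto-remove build-essential \
 && rm -rf /var/lib/apt/lists/*

ENTRYPOINT ["locust"]
```

Each RUN instruction produces its own layer, and a file deleted in a later layer still occupies space in the earlier one, which is why the cleanup has to happen in the same instruction as the installation.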

For comparison, in terms of size:

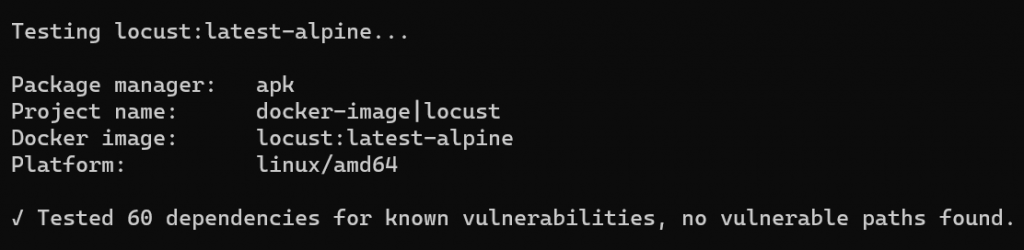

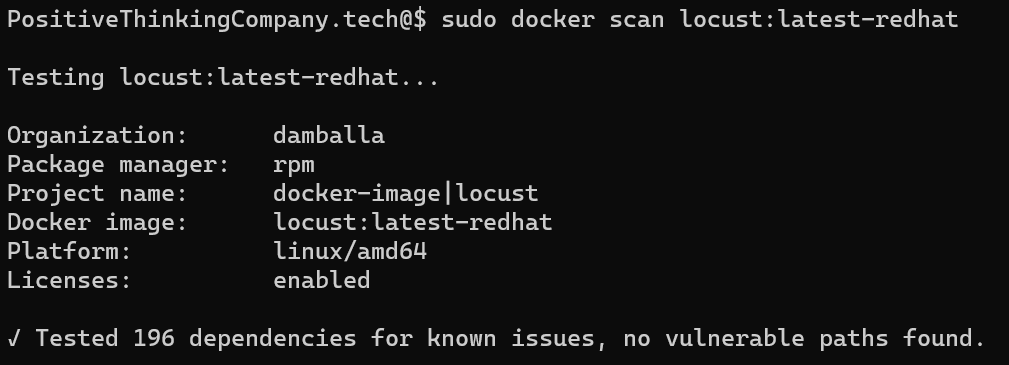

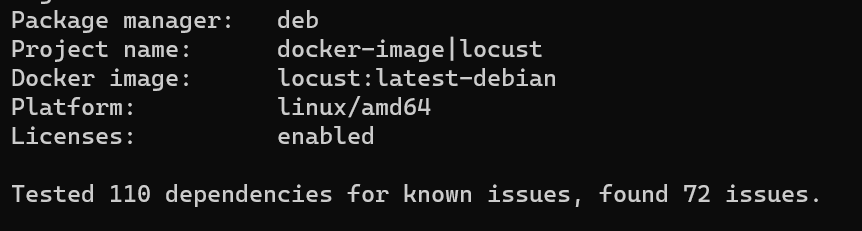

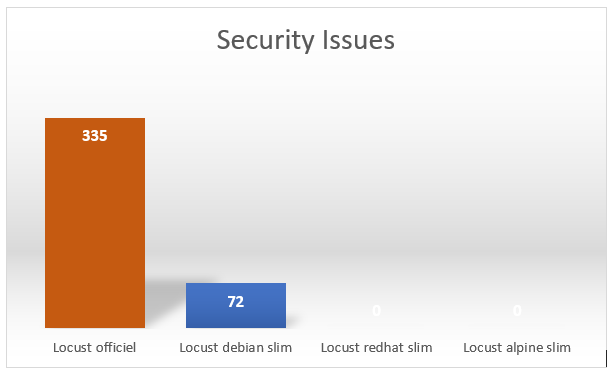

In terms of vulnerabilities, the result is just as unequivocal:

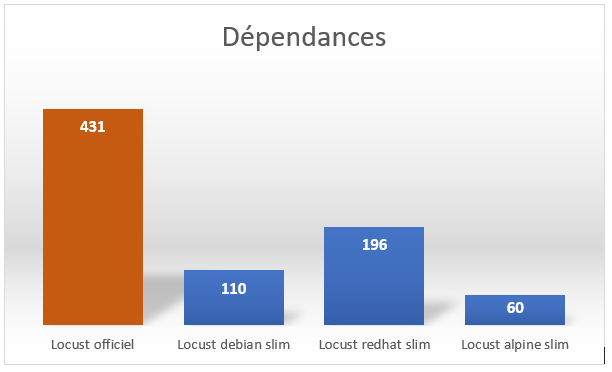

Note the number of dependencies, which is significantly lower.

If we take the debian base (base used by the original project):

There are still a number of problems, but we have reduced the attack surface. We count only 110 dependencies (compared to 431 in the official image) and 72 security problems (compared to 335).

It is worth checking the images in use regularly, since substantial gains are possible:

Note that the images and Dockerfiles mentioned here are not ready for production. For example, an image should not be built from latest versions but should correspond to the tag of the application, which means restricting the build to the appropriate tag.
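One possible way to tie the build to the application tag is sketched below. The `PYTHON_TAG` and `LOCUST_VERSION` build arguments are hypothetical names, and the sketch assumes prebuilt wheels are available so that no compiler is needed.

```dockerfile
# Hypothetical build arguments, passed by the release pipeline, for example:
#   docker build --build-arg LOCUST_VERSION=<application tag> -t <registry>/locust:<application tag> .
ARG PYTHON_TAG=3.8-slim
FROM python:${PYTHON_TAG}

ARG LOCUST_VERSION

# Fail early when no version is given, rather than silently
# installing whatever release is the latest at build time.
RUN test -n "${LOCUST_VERSION}" \
 && pip install --no-cache-dir "locust==${LOCUST_VERSION}"

# Record the packaged version in the image metadata.
LABEL org.opencontainers.image.version="${LOCUST_VERSION}"

ENTRYPOINT ["locust"]
```

The image tag, the installed package version, and the image label then all carry the same value, which makes it easier to tell what a running container actually contains.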

Also, this is a tool that is quite simple to use and that looks more like a packaged application than a dedicated service, which does not take away the value of adding a health check and a liveness probe.

Conclusion

In conclusion, reducing the size of an image, and improving its relative security by limiting the packages it includes, is relatively simple to implement. In this case, a very slight review of the build process was enough, without changing the result for the user.
